Top 10 AI Tools for Musicians

Discover how AI music is made, from data training to composition, and how it’s changing the way we create and hear music.

Consider this: you open your favorite music app, and the song playing sounds eerily familiar. Upon inspection, you discover it’s an AI cover of one of your all-time favorite tracks. You think it’s just one of those new fads, but you can’t help but wonder: How do they make these things? How do you make AI music covers, anyway? If you’ve ever pondered similar questions, you’re not alone.

As the technology behind AI-generated music improves, so does our curiosity about how it works. This guide will help you clear up the mystery and understand the steps in creating these unique tunes. Knowing how AI music works can help you achieve your goals, like making your own AI covers. SendFame’s AI content maker is a valuable tool for creating unique AI music covers. The platform can help you better understand the steps in AI-generated music while allowing you to produce your music covers quickly.

AI-generated music refers to musical compositions created with artificial intelligence technologies, distinguishing itself from traditional music production by involving algorithms and machine learning models rather than solely human creativity and manual skill.

Traditional music production is a human-driven process where composers and musicians use their creativity, experience, and technical skills to craft melodies, harmonies, rhythms, and arrangements over hours or days. This method emphasizes personal expression, emotional depth, and cultural context, often requiring significant time and resources.

In contrast, AI-generated music leverages sophisticated algorithms trained on vast datasets of existing music. These AI systems analyze patterns in rhythm, melody, harmony, and genre-specific features to generate new compositions autonomously, sometimes mimicking particular styles or creating novel musical pieces. The process can produce music much faster than traditional methods, in minutes instead of days, while still allowing human users to personalize and modify the output to reflect their artistic vision.

AI music generation relies on advanced machine learning models such as deep neural networks, generative adversarial networks, recurrent neural networks, and Transformers.

Neural networks form the backbone of AI music generation, with deep learning models trained on vast datasets of musical works spanning genres, instruments, and historical periods. These models analyze pitch, rhythm, tempo, and harmony patterns by processing symbolic representations like MIDI files or raw audio spectrograms.

For instance, Google’s Music Transformer model employs self-attention mechanisms to capture long-term musical structures, enabling it to generate coherent, minute-long compositions that mirror the stylistic nuances of classical or jazz music. By learning hierarchical relationships within the data, such as how a melody evolves or how chords resolve, these networks synthesize new sequences that adhere to musical grammar while introducing novel variations.

The effectiveness of these models hinges on the diversity and quality of training data. Datasets often include thousands of hours of music, ranging from Bach chorales to contemporary pop tracks, allowing the AI to internalize genre-specific conventions. Preprocessing steps, such as tokenizing musical notes or normalizing tempos, ensure the input is structured for optimal learning.
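The preprocessing described above can be illustrated with a small sketch. It assumes a made-up token vocabulary (`SHIFT_n`, `NOTE_n`, `DUR_n`) and a hypothetical `Note` record; real systems use their own formats. The point it shows is that converting seconds into beat-grid steps makes the same phrase produce the same tokens regardless of the tempo it was played at.

```python
from dataclasses import dataclass

@dataclass
class Note:
    pitch: int           # MIDI note number, 0-127
    start_sec: float     # onset time in seconds
    duration_sec: float  # length in seconds

def tokenize(notes, tempo_bpm, steps_per_beat=4):
    """Convert notes into tempo-independent string tokens.

    Times are converted from seconds to grid steps (4 steps per beat =
    sixteenth notes), which normalizes away the recording tempo.
    The token names are an illustrative assumption, not a standard.
    """
    sec_per_step = 60.0 / tempo_bpm / steps_per_beat
    tokens = []
    prev_step = 0
    for note in sorted(notes, key=lambda n: n.start_sec):
        start_step = round(note.start_sec / sec_per_step)
        length = max(1, round(note.duration_sec / sec_per_step))
        shift = start_step - prev_step
        if shift > 0:
            tokens.append(f"SHIFT_{shift}")  # time elapsed since previous onset
        tokens.append(f"NOTE_{note.pitch}")
        tokens.append(f"DUR_{length}")
        prev_step = start_step
    return tokens

# The same two-note phrase played at 120 BPM and at 60 BPM
fast = [Note(60, 0.0, 0.5), Note(64, 0.5, 0.5)]
slow = [Note(60, 0.0, 1.0), Note(64, 1.0, 1.0)]
print(tokenize(fast, tempo_bpm=120))  # ['NOTE_60', 'DUR_4', 'SHIFT_4', 'NOTE_64', 'DUR_4']
print(tokenize(slow, tempo_bpm=60))   # identical tokens
```

Both calls yield the same token list, which is what lets a model learn the phrase once rather than once per tempo.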

However, biases in datasets, such as the overrepresentation of Western classical music, can influence output styles, underscoring the need for ethical curation to foster inclusivity in AI-generated compositions.

Generative adversarial networks (GANs) introduce a competitive dynamic to music generation, pairing a generator network that creates music with a discriminator network that evaluates its authenticity. The generator iteratively refines its output based on the discriminator’s feedback, striving to produce compositions indistinguishable from human-made music.

This adversarial process is particularly effective for stylistic emulation, such as generating jazz improvisations or EDM beats that align with genre-specific tropes. Over time, the generator learns to avoid repetitive or dissonant patterns, resulting in harmonically rich and rhythmically cohesive pieces.

Human collaboration remains critical in GAN-based systems. Musicians often fine-tune the generator’s parameters to emphasize specific traits, like emotional intensity or melodic complexity, or curate the discriminator’s training data to prioritize particular genres.

For example, a producer might train the discriminator on a curated set of orchestral scores to guide the AI toward cinematic soundscapes. This synergy allows GANs to serve as creative tools rather than autonomous composers, enabling artists to explore uncharted musical territories while retaining editorial control.

Recurrent neural networks (RNNs), particularly Long Short-Term Memory (LSTM) networks, excel at modeling sequential data by maintaining a “memory” of previous notes to inform future predictions. This makes them adept at generating melodies with coherent phrasing, as they can anticipate resolutions and avoid abrupt tonal shifts.

For instance, LSTM-based models trained on piano compositions reliably produce arpeggios and chord progressions that mirror the fluidity of human performance. However, their sequential processing limits scalability, as generating long compositions requires significant computational resources and time.
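The idea of predicting each note from what came before can be sketched with something far simpler than an LSTM: a first-order Markov chain, which remembers only the single previous note. This is a deliberately swapped-in technique, not how an RNN works internally, and the tiny `corpus` below is invented for illustration. It shares only the note-by-note generation loop that makes sequential models slow on long pieces.

```python
import random
from collections import defaultdict

def train(melodies):
    """Record which pitch was observed to follow which."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            transitions[current].append(nxt)
    return transitions

def generate(transitions, start, length, seed=None):
    """Generate one note at a time, each chosen from observed continuations."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # dead end: this pitch was never followed by anything
            break
        melody.append(rng.choice(options))
    return melody

# Hypothetical training melodies as MIDI pitch numbers
corpus = [
    [60, 62, 64, 65, 67, 65, 64, 62, 60],
    [60, 64, 67, 64, 60],
]
model = train(corpus)
print(generate(model, start=60, length=8, seed=0))
```

Every step in the output is a transition that occurred somewhere in the training melodies, which is a crude version of "learning musical grammar" from data.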

Transformers address these limitations through parallelized self-attention mechanisms, simultaneously analyzing relationships between all notes in a sequence. This allows them to capture long-range dependencies, such as recurring motifs in a symphony, more efficiently than RNNs. The Music Transformer, for example, generates compositions four times longer than earlier RNN-based models while maintaining structural coherence.

Transformers also enable conditional generation tasks, such as creating accompaniments for a given melody, by processing input and output sequences in tandem. Their ability to handle polyphonic textures and intricate harmonies has made them a preferred choice for generating complex musical arrangements.
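To make the self-attention idea above more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It makes two simplifying assumptions: the note embeddings are made-up 2-D vectors, and queries, keys, and values are all the raw embeddings, whereas a real Transformer first applies learned projection matrices and uses many attention heads.

```python
import math

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values.

    Learned projections are omitted (an assumption made for brevity).
    Returns the attended outputs and the attention weight matrix.
    """
    dim = len(vectors[0])
    weights, outputs = [], []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        row = softmax(scores)  # how much this note attends to every note
        weights.append(row)
        outputs.append(
            [sum(w * v[i] for w, v in zip(row, vectors)) for i in range(dim)]
        )
    return outputs, weights

# Hypothetical embeddings for four notes; notes 0 and 3 are the same pitch
notes = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, 0.0]]
outputs, weights = self_attention(notes)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row of weights covers every position in the sequence at once, so note 0 attends to its repetition at position 3 exactly as strongly as to itself, no matter how far apart the two are. That is the property that helps with recurring motifs.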

A widespread misunderstanding about AI-generated music is that it consists of random sounds or lacks meaningful artistic merit. In reality, contemporary AI compositions demonstrate high sophistication, producing harmonically sound, rhythmically stable music rich in stylistic detail.

These AI systems analyze vast amounts of musical data to understand complex patterns such as chord progressions, melodic contours, and rhythmic variations, allowing them to create pieces that often rival human compositions in quality and coherence. Listeners may find it difficult to distinguish between music crafted by AI and that produced by skilled musicians, underscoring the advanced capabilities of modern algorithms.

Although AI has not yet fully captured the emotional nuance and personal experiences that human artists infuse into their work, it delivers precise, innovative, and stylistically consistent music within the frameworks it has learned. Far from simple imitations, AI-generated pieces reflect a dynamic evolution as models become more advanced and training datasets grow more diverse.

This progression expands the creative horizons of music production and makes composing accessible to a broader audience, allowing both amateurs and professionals to experiment and innovate. Platforms like SendFame are building on these advancements, enabling creators to distribute AI-assisted music globally, further democratizing the industry and opening new avenues for artistic expression.

AI music generation starts with a step that’s both essential and quite complex: collecting massive datasets of music and training AI algorithms on this musical data. These collections include a broad spectrum of musical elements, encompassing multiple genres, melodic lines, harmonic progressions, rhythmic patterns, and the emotional nuances of compositions.

Exposing AI models to such rich and diverse musical content allows the systems to identify and internalize the fundamental patterns and frameworks that define music. During training, these datasets—often in symbolic formats like MIDI files or raw audio recordings—are fed into sophisticated neural networks. These networks analyze the data to recognize recurring motifs, typical chord sequences, and familiar song structures.

Through this iterative learning, the AI builds a comprehensive knowledge base of musical styles and conventions, enabling it to replicate or innovate within these boundaries. This foundational step equips the AI with the ability to generate original compositions that are stylistically consistent and musically meaningful, setting the stage for subsequent phases of music creation.

After the AI has been sufficiently trained on extensive musical datasets, it moves into the stage of intricate pattern analysis. At this point, the system deeply explores the core elements of music, including melody, harmony, rhythm, and the emotional undertones that give a piece its unique character. The AI carefully examines how melodies progress and intertwine, how harmonies support and enhance the melodic lines, and how rhythmic structures provide momentum and drive to the composition.

This detailed examination allows the AI to grasp the subtle relationships and interactions between these musical components, essential for creating balanced and engaging music. The AI goes beyond the technical aspects of music by interpreting the emotional content embedded in lyrics or any textual input it receives. By analyzing the sentiment and mood conveyed through words, the system can tailor the musical output to match the desired emotional atmosphere, ensuring that the final piece resonates with the intended feeling or message.

This comprehensive pattern analysis enables the AI to produce structurally sound and emotionally compelling compositions. The music is aligned closely with the theme or lyrical content provided. The result is music that feels coherent, expressive, and authentically connected to its creative inspiration.
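As a rough illustration of matching music to lyrical mood, the sketch below scores lyrics against two tiny hand-written word lists and maps the result to musical settings. Everything here is hypothetical: the word lists, the `lyric_mood` helper, and the mapping of positive sentiment to a major key and faster tempo are illustrative conventions, and production systems learn these associations from data rather than from a lookup.

```python
# Hypothetical word lists; real systems learn sentiment from data
POSITIVE = {"love", "sun", "dance", "joy", "bright"}
NEGATIVE = {"alone", "rain", "cry", "lost", "dark"}

def lyric_mood(lyrics):
    """Map a crude sentiment score to illustrative musical settings."""
    words = [w.strip(".,!?;:").lower() for w in lyrics.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return {"mode": "major", "tempo_bpm": 120}
    if score < 0:
        return {"mode": "minor", "tempo_bpm": 72}
    return {"mode": "major", "tempo_bpm": 96}  # neutral fallback

print(lyric_mood("Dance in the sun!"))           # {'mode': 'major', 'tempo_bpm': 120}
print(lyric_mood("Lost and alone in the rain"))  # {'mode': 'minor', 'tempo_bpm': 72}
```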

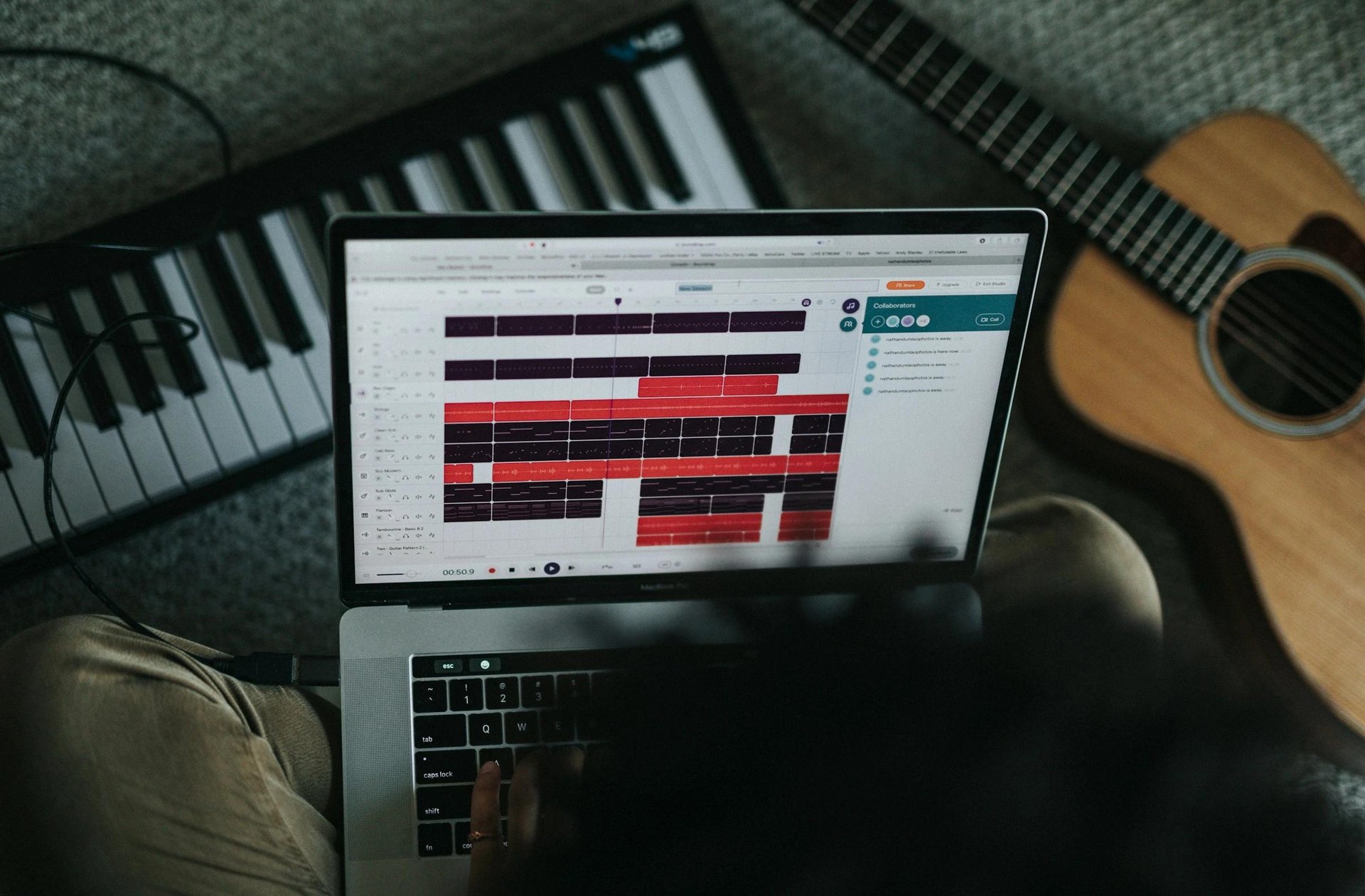

Generating music through AI typically follows two main approaches, each with a unique focus and output style. The first method, symbolic-based generation, involves creating music in abstract, symbolic formats such as MIDI files. This approach centers on individual notes' precise arrangement, timing, velocity, and dynamics, effectively outlining the musical score without producing actual sound. This symbolic representation allows for easy manipulation and editing of musical elements, making it a popular choice for composing melodies and harmonies that can later be rendered into audio by digital instruments.
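A symbolic representation like the one described above can be sketched as a list of note records. The `NoteEvent` class and its field names are assumptions for illustration (MIDI files store equivalent information in a binary event format). The pitch-to-frequency formula is the standard equal-temperament conversion with A4 tuned to 440 Hz.

```python
from dataclasses import dataclass

@dataclass
class NoteEvent:
    pitch: int           # MIDI note number (60 = middle C, 69 = A4)
    start_beat: float    # when the note begins, in beats
    length_beats: float  # how long it lasts, in beats
    velocity: int        # 1-127, how hard the note is struck

def transpose(events, semitones):
    """Shift every note by a number of semitones; timing is untouched."""
    return [
        NoteEvent(e.pitch + semitones, e.start_beat, e.length_beats, e.velocity)
        for e in events
    ]

def pitch_to_hz(pitch):
    """Equal-temperament frequency with A4 (MIDI 69) at 440 Hz."""
    return 440.0 * 2 ** ((pitch - 69) / 12)

c_major_arpeggio = [
    NoteEvent(60, 0, 1, 90),
    NoteEvent(64, 1, 1, 80),
    NoteEvent(67, 2, 1, 85),
]
up_a_tone = transpose(c_major_arpeggio, 2)
print([e.pitch for e in up_a_tone])  # [62, 66, 69]
print(round(pitch_to_hz(60), 2))     # 261.63
```

Because the score is just data, an edit such as transposition is a one-line transformation. No sound exists until an instrument renders the events.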

On the other hand, audio-based generation takes a more direct route by synthesizing raw sound waves to produce fully formed audio tracks. This technique uses sophisticated neural networks that generate the actual sound, including vocals and instrumental timbres, resulting in a finished audio piece without further processing. SendFame’s AI Music Generator exemplifies this advanced approach by transforming user inputs such as lyrics, themes, or mood preferences into complete, polished songs.

The platform allows users to customize various aspects, such as music genres, beats, and emotional tones, or even provide their own lyrics and song titles. Within approximately 30 seconds, SendFame’s system crafts a whole song that integrates melodies, harmonies, and vocals, offering a smooth and efficient way to produce original music tailored to individual creative visions.
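To show what "raw sound waves" means at the data level, the sketch below builds half a second of a 440 Hz sine tone as a list of samples and encodes it as 16-bit WAV audio using only the Python standard library. This is not neural synthesis and says nothing about how SendFame or any other product works internally. It only illustrates the output format an audio-based model must produce: tens of thousands of amplitude values per second.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 44100  # samples per second (CD quality)

def sine_samples(freq_hz, seconds, amplitude=0.5):
    """Return amplitude values in [-1, 1] for a pure tone."""
    count = int(SAMPLE_RATE * seconds)
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
        for i in range(count)
    ]

def write_wav(target, samples):
    """Write mono 16-bit PCM audio to a filename or file-like object."""
    with wave.open(target, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes = 16 bits per sample
        wav.setframerate(SAMPLE_RATE)
        frames = b"".join(
            struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767))
            for s in samples
        )
        wav.writeframes(frames)

tone = sine_samples(440.0, seconds=0.5)
buffer = io.BytesIO()  # pass a filename such as "a440.wav" to save to disk
write_wav(buffer, tone)
print(len(tone), "samples for half a second of audio")
```

A three-minute song at this sample rate is nearly eight million samples per channel, which is one reason audio-based generation is more demanding than emitting a few hundred symbolic note events.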

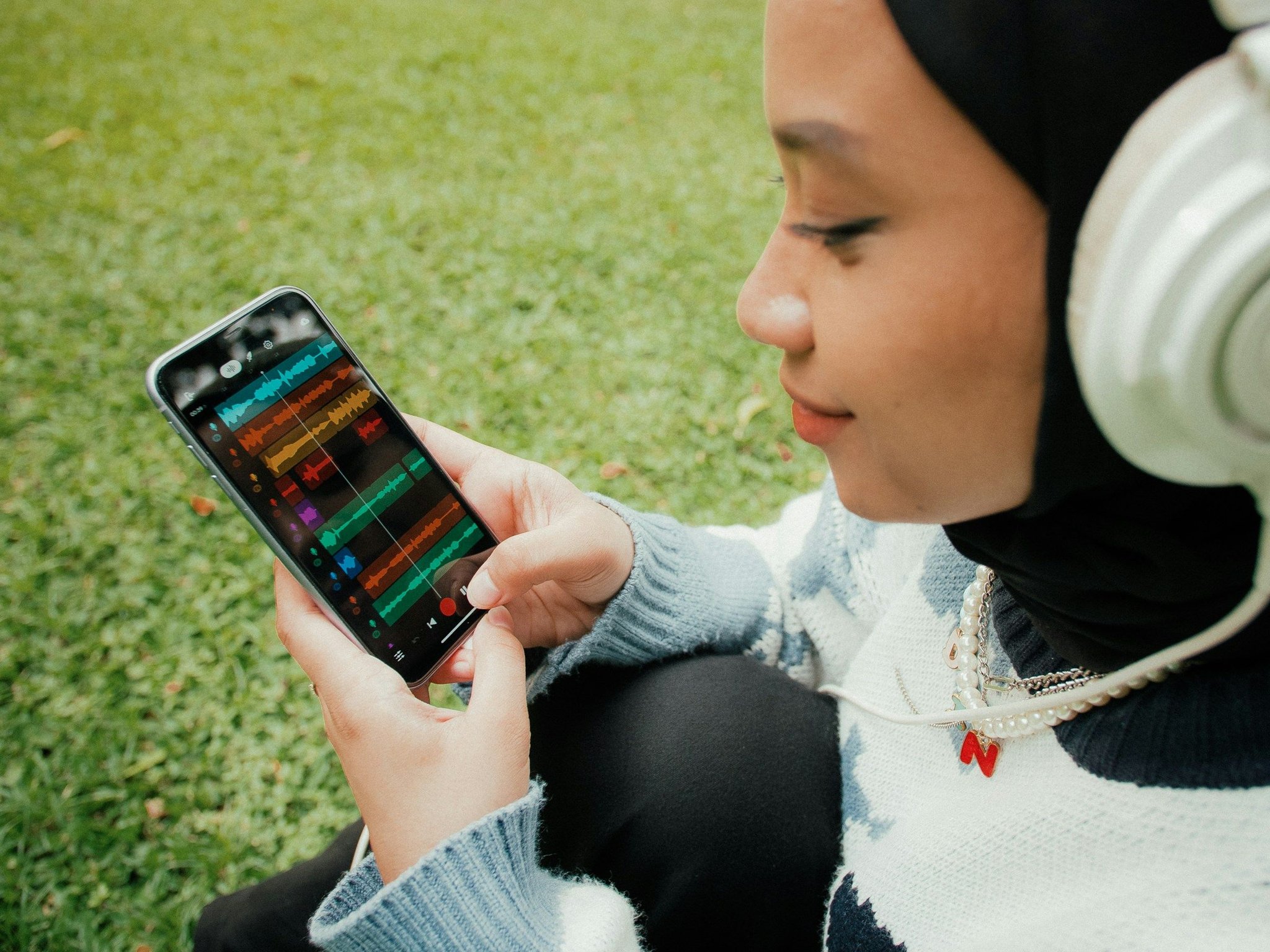

Despite the impressive capabilities of AI in generating music, human involvement remains a key component in refining and perfecting the final product. Once the AI produces an initial composition, creators can engage with the output by adjusting elements such as lyrics, song structure, and visual components like cover art. This hands-on editing allows users to add their personal touch and artistic preferences to the music, ensuring the result is technically sound, creatively authentic, and meaningful.

This collaborative process between AI and human creators is supported by continuous feedback loops, where user input is used to fine-tune the AI’s parameters or prompt the regeneration of specific song sections. By iterating on the music multiple times, the system becomes better aligned with the creator’s vision, enhancing the composition's emotional impact and overall quality. This cyclical refinement ensures that the music evolves beyond a generic output into a polished, expressive piece that resonates with the artist’s intent and the audience’s expectations, highlighting the importance of human creativity in the AI music production workflow.

SendFame differentiates itself by integrating multiple AI tools in a smooth workflow. First, it converts text input into emotionally aligned music and vocals, creating personalized and unique songs. The platform supports over 50 languages, enhancing accessibility and creative diversity.

Finally, users receive audio and AI-generated music videos, album covers, and visualizers, offering a comprehensive creative package. This holistic approach ensures that the music is technically sound, emotionally engaging, and visually appealing, leading to higher quality and more innovative results than many other AI music tools.

Artificial intelligence is transforming the music creation process, affecting how artists write, record, and refine their songs. According to recent reports, nearly 60% of musicians already use AI at various stages of their projects. AI tools help artists generate original melodies, harmonies, and beats, allowing them to explore unique soundscapes while easing the technical hurdles associated with music production.

AI can help musicians develop new ideas that inspire creativity and artistic expression by analyzing existing songs and compositions. The accelerating adoption of these technologies democratizes music creation, enabling more people to participate in the industry. AI can also help speed up the production process and change the future sound of music as we know it.

Artificial intelligence transforms how audiences find and interact with music, fundamentally reshaping the listening experience. Studies indicate that nearly three-quarters of internet users, around 74%, have engaged with AI-powered music platforms to discover new songs or share their favorite tracks. Streaming services employ sophisticated AI algorithms that meticulously analyze individual listening behaviors, preferences, and patterns.

By doing so, these platforms create highly personalized playlists and recommendations that resonate with each user’s unique taste, thereby significantly boosting engagement and satisfaction. This tailored approach makes music discovery more intuitive and keeps listeners returning for more, fostering deeper connections between fans and their favorite genres or artists.
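One classic way to turn listening behavior into recommendations is user-based collaborative filtering, sketched below. The listener names, track labels, and play counts are invented, and commercial streaming services use far more elaborate (and proprietary) systems; this only shows the basic idea of suggesting what similar listeners play.

```python
import math

def cosine(a, b):
    """Cosine similarity between two play-count vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Hypothetical play counts per listener for tracks T1..T4
tracks = ["T1", "T2", "T3", "T4"]
plays = {
    "ana":   [12, 0, 5, 0],
    "ben":   [10, 1, 4, 7],
    "chloe": [0, 9, 0, 1],
}

def recommend(user):
    """Rank tracks the user has not played by similar listeners' plays."""
    scores = [0.0] * len(tracks)
    for other, counts in plays.items():
        if other == user:
            continue
        similarity = cosine(plays[user], counts)
        for i, count in enumerate(counts):
            if plays[user][i] == 0:  # only score unheard tracks
                scores[i] += similarity * count
    ranked = sorted(range(len(tracks)), key=lambda i: scores[i], reverse=True)
    return [tracks[i] for i in ranked if scores[i] > 0]

print(recommend("ana"))  # ['T4', 'T2']
```

Ana's taste overlaps heavily with Ben's and not at all with Chloe's, so Ben's favorite unheard track (T4) ranks first.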

The impact of AI-driven recommendation systems extends beyond enhancing user experience; they also play a vital role in shaping music consumption trends on major streaming platforms. More than half of the most-streamed songs owe their popularity, at least partly, to the influence of AI-curated recommendations.

This level of personalization helps artists gain visibility among listeners who are most likely to appreciate their style, effectively bridging the gap between creators and their target audience. Consequently, AI democratizes music discovery and empowers artists by optimizing how their work reaches potential fans, creating a more efficient and dynamic music ecosystem for all stakeholders involved.

AI’s impact on entertainment is rapidly expanding beyond traditional music production and consumption, profoundly influencing virtual concerts, film scoring, gaming, and marketing strategies. Virtual concerts powered by AI technologies enable artists to perform in fully digital spaces, breaking down geographical barriers and allowing global audiences to participate in real-time or on-demand experiences.

These AI-enhanced events often incorporate real-time data analytics to adapt performances dynamically, such as modifying setlists based on audience reactions or integrating interactive visuals tailored to viewer preferences. In film and gaming, AI-generated soundtracks and adaptive music scores boost storytelling by responding fluidly to narrative shifts or gameplay actions, creating immersive atmospheres that heighten emotional engagement and player immersion.

AI transforms how music and related content are promoted in marketing by crafting highly personalized audio experiences and interactive campaigns. Machine learning algorithms analyze audience data to pinpoint the most receptive listener segments, enabling artists and labels to target promotions with greater precision and efficiency.

AI can also automate content scheduling and generate dynamic marketing materials that evolve based on real-time engagement metrics, freeing creators to focus more on artistry. These advancements demonstrate AI’s ability to integrate music into broader entertainment ecosystems smoothly, enhancing fan interaction and opening new revenue streams. However, balancing technological innovation with authentic human connection remains essential to maintaining the emotional resonance that defines music’s cultural value.

According to a Business Research Report, the AI in music market is expected to grow rapidly in the next few years, reaching $10.43 billion in 2029 at a compound annual growth rate (CAGR) of 23.5%. This rapid expansion is driven by technological advancements, increased adoption of AI tools by musicians and producers, and the growing demand for personalized and immersive music experiences.

AI-generated music is anticipated to boost the overall music industry revenue by 17.2% in 2025, reflecting new revenue streams and business models emerging from AI integration. However, the rise of AI also presents challenges, such as concerns over the proper crediting of original artists and potential revenue shifts. Some studies warn of a possible 27% revenue decline for human creators by 2028 if compensation systems do not adapt.

Platforms like SendFame contribute to these trends by leveraging AI to support artists in music creation, distribution, and fan engagement. By integrating AI-driven tools, SendFame helps musicians produce high-quality content efficiently while connecting them with personalized audience segments, thus enhancing both creative output and commercial success.

Artificial Intelligence is changing how music is made. AI-driven tools are already transforming music production, and AI’s role in music creation will continue to expand. In 2025, AI tools will become highly personalized, learning individual artists’ preferences like tonal balance and favored effects. These intelligent systems can automatically mix tracks while preserving the creator's unique sonic character.

They will also be able to generate melodies, harmonies, and full musical arrangements almost instantly, significantly speeding up the ideation and production phases. AI-driven mixing and mastering technologies will analyze audio in real time and provide tailored, genre-specific suggestions, considerably reducing the time spent on post-production without compromising artistic control.

The impact of AI extends far beyond the studio. It is fundamentally changing how people discover and engage with music. Approximately 74% of internet users now depend on AI-powered platforms to find new songs and share their favorite tracks. Listeners benefit from personalized playlists and recommendations finely tuned to individual tastes.

The sophistication of AI-generated music has reached a point where about 82% of listeners are unable to distinguish between compositions created by humans and those produced by machines. Blending human creativity with artificial intelligence opens new possibilities for audience interaction and content customization, allowing for a more immersive and tailored listening experience.

AI integration in music production encompasses many tools that assist at every creative process stage. These technologies enable precise audio editing, instrumental isolation, and smooth manipulation of complex recordings, making technical tasks more accessible and less time-consuming.

Automated systems for mixing and mastering adapt processing techniques to suit each track’s unique characteristics, delivering consistent, high-quality sound while preserving the artist’s vision. Additionally, AI-powered audio separation capabilities facilitate remixing and sampling by cleanly isolating individual elements within a mix.

Collectively, these advancements allow musicians to experiment freely, streamline workflows, and lower barriers to entry, fostering a more inclusive and innovative music industry.

Fusing blockchain technology with AI-driven music platforms is rapidly becoming a pivotal development in rights management and monetization. Blockchain’s core advantage lies in its ability to create transparent and tamper-proof records of music ownership and usage, which helps ensure that royalties are distributed fairly and efficiently.

This transparency significantly reduces conflicts over intellectual property by providing an immutable ledger accessible to all stakeholders. By leveraging blockchain, artists can tokenize their creative works as digital assets, opening up innovative revenue channels.

These tokenized assets enable direct transactions between fans and creators, bypassing traditional intermediaries such as record labels and streaming services. Thus, musicians are granted greater control over their earnings and creative rights.
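The tamper-evident record keeping described above rests on a simple mechanism: each ledger entry stores a hash of the previous one, so changing any past record breaks the chain. The sketch below shows only that mechanism, with invented record fields. It is not a real blockchain, since it has no network, consensus, or tokens.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry

def entry_hash(body):
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def append(ledger, record):
    """Add a record that is linked to the hash of the previous entry."""
    prev = ledger[-1]["hash"] if ledger else GENESIS
    body = {"record": record, "prev_hash": prev}
    ledger.append({**body, "hash": entry_hash(body)})

def is_valid(ledger):
    """Recompute every hash and check that the links are unbroken."""
    prev = GENESIS
    for entry in ledger:
        body = {"record": entry["record"], "prev_hash": entry["prev_hash"]}
        if entry["prev_hash"] != prev or entry["hash"] != entry_hash(body):
            return False
        prev = entry["hash"]
    return True

# Hypothetical ownership and usage records
ledger = []
append(ledger, {"track": "Song A", "owner": "artist_1", "share": 1.0})
append(ledger, {"track": "Song A", "event": "stream", "royalty_usd": 0.004})
print(is_valid(ledger))  # True

ledger[0]["record"]["owner"] = "someone_else"  # tamper with history
print(is_valid(ledger))  # False
```

In a real deployment many independent parties hold copies of the ledger, which is what makes a rewritten history detectable by everyone rather than by a single record keeper.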

The AI music industry is on the brink of remarkable expansion, with its market value projected to skyrocket from $6.2 billion in 2025 to an impressive $38.7 billion by 2033. Generative AI is expected to play a significant role within this growth, with its market worth rising from $2.92 billion in 2025 to $18.47 billion by 2034.
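The growth rates implied by these projections can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short calculation below applies it to the figures quoted above. It assumes the period lengths are simply the differences between the stated years.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.25 = 25% per year)."""
    return (end_value / start_value) ** (1 / years) - 1

overall = cagr(6.2, 38.7, 2033 - 2025)       # whole AI music market, $ billions
generative = cagr(2.92, 18.47, 2034 - 2025)  # generative AI segment, $ billions

print(f"{overall:.1%}")     # 25.7%
print(f"{generative:.1%}")  # 22.7%
```

Both projections therefore imply growth of roughly a quarter per year, sustained for close to a decade.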

This rapid advancement suggests that AI technologies will become deeply integrated into every stage of music production, from initial composition and arrangement to mastering and distribution. As these tools evolve, they will enhance efficiency and transform creative possibilities, making AI an essential component of the music creation process. AI is anticipated to democratize music-making further, breaking down barriers that once limited access to professional-quality production.

This technology will allow both beginners and seasoned artists to craft sophisticated music easily, fostering a new era of human-machine collaboration that could give rise to entirely new genres and sonic landscapes. Additionally, AI’s ability to personalize music experiences will become increasingly refined, adapting playlists and compositions to match listeners’ moods, environments, and tastes, thereby deepening engagement and emotional connection.

Despite these exciting prospects, the industry must also address important ethical questions, such as ensuring proper credit for artists and managing the impact of AI on traditional creative roles. Striking a balance between innovation and fairness will be crucial as the music world navigates this transformative period.

SendFame offers a clean and simple platform for creating royalty-free music and videos with AI. The company’s technology analyzes user input, such as text prompts, to compose complete songs with vocals, lyrics, and instrumentals across various genres within minutes.

This approach allows users without deep musical expertise to efficiently bring their creative ideas to life. With SendFame, you can create AI music covers and original songs in minutes, rather than hours or days, so you can focus on your project instead of getting bogged down in production.

The biggest draw of SendFame is speed. The platform can generate royalty-free music in minutes, giving users plenty of time to focus on the creative aspects of their projects. In addition to music, SendFame also integrates AI-generated celebrity-style video messages and dynamic visuals to help creators produce engaging multimedia packages that combine sound and imagery for social media, marketing, or personal use. You can complete your next viral-ready music or video project without complex production processes or years of music training.

SendFame is excellent for all users, including content creators, marketers, and individuals looking to generate music and video for personal use. The platform’s focus on speed and user-friendliness makes it suitable for any project, from business marketing to personal entertainment. With a growing community of over 170,000 users, SendFame demonstrates broad appeal and practical utility.

SendFame emphasizes ethical AI use, supporting artists by accelerating creative workflows rather than replacing human artistry.

SendFame is a powerful AI tool that allows users to create celebrity covers from any song in seconds. Instead of just a few boring preset voices, SendFame has a massive library of hyper-realistic voices. Just upload a track, pick a voice, and watch the AI recreate your song with stunning new vocals. You can even customize the lyrics to create the perfect personalized cover. It's a great way to make your existing music more interesting, create funny parody songs, or produce something unique for a special occasion.
