Your Guide to AI Character Voices

Discover how to create captivating AI character voices for games, animation, and more. Our guide explores the tech, tools, and pro tips for amazing results.

Forget everything you know about those clunky, robotic text-to-speech voices from a decade ago. We’re talking about AI character voices—lifelike, expressive, and emotionally rich vocals created by artificial intelligence. These aren't just for reading text anymore; they're delivering hyper-realistic and completely unique vocal performances for video games, audiobooks, and animated projects.

Imagine a sprawling open-world game where every single character—from the gruff bartender to the mischievous forest sprite—has a distinct, memorable voice. Or picture an audiobook where one narrator can flawlessly switch between a dozen different character tones and emotions. This isn't some far-off sci-fi concept. It’s happening right now, all thanks to AI character voices.

We've officially left the era of monotonous, robotic narration in the dust. Today's creative tools are more like a sophisticated audio palette, allowing anyone to craft believable vocal performances brimming with emotion. This guide will walk you through how this technology is shaking up storytelling and creating more immersive experiences, giving you the inside scoop on bringing your own digital characters to life.

This tech is a total game-changer, especially for indie creators, small dev teams, and solo storytellers. Think about it: creating high-quality voiceovers for a project with tons of characters used to mean big budgets, complex logistics, and a whole lot of time. Now, that power is in everyone's hands.

This shift unlocks all kinds of cool new possibilities:

- Indie game developers can finally afford to fill their worlds with diverse, fully voiced characters.
- Animators and filmmakers can quickly mock up dialogue and play with different vocal deliveries in pre-production.
- Audiobook producers can deliver incredibly immersive multi-character stories with a level of consistency that was tough to nail before.
- Marketers can create unforgettable brand personalities for virtual assistants and ad campaigns.

These vocal breakthroughs are part of a much bigger trend in media. AI-generated voices fall under the umbrella of synthetic media, and if you want the full story, you can check out our guide to what synthetic media is. It'll give you a solid foundation for how AI is changing content creation everywhere.

This isn't just about finding a cheaper way to do the same old thing. It’s about unlocking entirely new creative doors. AI character voices are making a level of narrative depth possible that was once exclusive to massive studios.

At the end of the day, these tools help creators tell bigger, better, and more engaging stories. To really get a grip on this world, it helps to understand what conversational AI is and how it works. Consider this your first step into a pretty exciting new frontier.

Ever wondered how a hunk of silicon and code learns to sound like a seasoned stage actor? It's not some dark art, but it's pretty close to how a master impressionist hones their craft. At its heart, an AI learns to speak by binge-listening to a colossal library of human speech—we’re talking thousands upon thousands of hours of audio recordings.

Think of this massive data dump as the AI’s classroom. It sits there, taking notes on every little detail. It hears the subtle rise in pitch that signals a question, the deep rumble of a dramatic monologue, and the rapid-fire delivery of an auctioneer. This is where it learns the fundamental mechanics of what makes us sound human.

From there, the real magic begins with something called neural networks. These are complex algorithms that act like the AI’s brain, built specifically to spot and replicate patterns. Just as an artist studies countless paintings to master brushstrokes, the AI sifts through all that audio to understand the invisible threads connecting text, sound, emotion, and rhythm.

The engine driving most AI character voices is a technology known as Text-to-Speech (TTS). It's the core system that turns the words you type into the sounds you hear. But forget the clunky, robotic voices of your old GPS that would pronounce "St." as "street" one minute and "saint" the next.

Today’s best systems use neural TTS. This is a much smarter approach that doesn't just read words—it interprets them. It analyzes context to figure out the right intonation, pacing, and emotional color. It’s the difference between a flat, monotone read-through and a performance that actually feels alive.
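To make "analyzes context" concrete, here is a tiny rule-based sketch of the "St." problem from the GPS example. It is an illustration only: neural TTS front ends learn decisions like this from data rather than from hand-written rules, and the function name `expand_st` is hypothetical.

```python
import re


def expand_st(text: str) -> str:
    """Expand each "St." to "Saint" or "Street" by looking at the next word.

    Hand-written heuristic for illustration; neural TTS learns this from data.
    """
    def choose(match: re.Match) -> str:
        next_word = match.group(1)  # None when "St." is not followed by a word
        if next_word is None:
            return "Street"
        # A capitalized word right after "St." usually names a saint
        # (or a place named after one), e.g. "St. Louis".
        if next_word[0].isupper():
            return f"Saint {next_word}"
        return f"Street {next_word}"

    return re.sub(r"\bSt\.(?:\s+(\w+))?", choose, text)


print(expand_st("We met on Main St. in St. Louis."))
# prints: We met on Main Street in Saint Louis.
```

The rule breaks on a sentence-final "St." followed by a new sentence ("...on Elm St. Then we left." becomes "Saint Then"), which is exactly the kind of ambiguity a context-aware neural model handles better.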

So how does it work? The process boils down to a few key steps:

- Analyzing the Text: First, the AI breaks down your script. It looks at punctuation, grammar, and sentence structure to figure out where to breathe, when to pause, and how to phrase things naturally.
- Predicting the Sound: Next, the neural network predicts the specific acoustic features needed to bring that text to life. It essentially creates a super-detailed musical score for the voice, mapping out the pitch, timing, and volume for every single syllable.
- Building the Audio: Finally, a piece of the puzzle called a vocoder takes that acoustic blueprint and cooks up the actual audio waveform. This is the final step that turns a mountain of abstract data into a clear, audible voice.
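The three stages can be sketched as a toy pipeline. Everything here is a deliberately simplified stand-in: the function names, the 120 Hz base pitch, the 30% rise for questions, and the plain sine-wave "vocoder" are all assumptions for illustration. A real system replaces stage 2 with a trained neural network and stage 3 with a neural vocoder.

```python
import math
from dataclasses import dataclass

SAMPLE_RATE = 16_000  # audio samples per second (arbitrary for this sketch)
PAUSE = "<pause>"


@dataclass
class Frame:
    """One entry in the voice's 'musical score': pitch, duration and loudness."""
    pitch_hz: float
    duration_s: float
    volume: float


def analyze_text(text: str) -> list[str]:
    """Stage 1: split the script into words, inserting a pause after punctuation."""
    units = []
    for token in text.split():
        word = token.strip(",.?!")
        if word:
            units.append(word)
        if token[-1] in ",.?!":
            units.append(PAUSE)
    return units


def predict_acoustics(units: list[str], is_question: bool) -> list[Frame]:
    """Stage 2: hand-written rules standing in for the neural acoustic model."""
    frames = [
        Frame(0.0, 0.25, 0.0) if unit == PAUSE
        else Frame(120.0, 0.08 * len(unit), 0.5)  # longer words take longer to say
        for unit in units
    ]
    if is_question:
        # Raise the pitch of the last spoken word, like the rise at the end of a question.
        for frame in reversed(frames):
            if frame.volume > 0:
                frame.pitch_hz *= 1.3
                break
    return frames


def vocode(frames: list[Frame]) -> list[float]:
    """Stage 3: render each frame as a sine tone (silence for pauses)."""
    samples = []
    for frame in frames:
        for i in range(round(frame.duration_s * SAMPLE_RATE)):
            t = i / SAMPLE_RATE
            samples.append(frame.volume * math.sin(2 * math.pi * frame.pitch_hz * t))
    return samples


text = "Are you ready?"
units = analyze_text(text)  # ['Are', 'you', 'ready', '<pause>']
frames = predict_acoustics(units, is_question=text.endswith("?"))
audio = vocode(frames)
print(len(audio) / SAMPLE_RATE, "seconds of audio")
```

The split mirrors the list above: each stage only consumes the previous stage's output, which is why real systems can swap in a better acoustic model or vocoder independently.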

The road to today's incredibly believable AI voices has been a long one. This tech has roots stretching back to the first half of the 20th century, with some seriously cool early contraptions. Bell Labs showed off the VODER (Voice Operation Demonstrator) way back in 1939, and in 1961 an IBM 7094 at Bell Labs famously sang "Daisy Bell," often cited as the first song ever sung by a computer-synthesized voice. As things picked up in the late 1980s, new methods allowed systems to stitch together speech from pre-recorded human sounds, making everything sound far more natural. You can take a deeper dive into this fascinating journey with this history of Text-to-Speech technology on Vapi.ai.

All of that groundwork paved the way for the mind-blowingly expressive systems we have at our fingertips today.

The real breakthrough wasn't just making computers talk—it was making them talk like us. Modern AI voice generation is less about engineering and more about capturing the art of human expression.

Beyond cooking up entirely new voices, another seriously powerful technique is voice cloning. This is where you train an AI on a specific person's voice to create a digital twin. With just a few minutes of crisp, clean audio, a system can learn the unique fingerprint of that voice—its pitch, timbre, and quirky speech patterns.

Once it's cloned, you can make this digital voice say anything you type, all while sounding remarkably close to the original speaker. This is a total game-changer for creators. Need to fix a single line of dialogue for a character, but the actor is halfway around the world? Voice cloning to the rescue. Want to create a perfectly consistent voice for your brand's virtual assistant? It's the ideal tool for the job.
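Since cloning depends on those few minutes of crisp, clean audio, it can help to check how much usable material you actually have before uploading. Below is a minimal sketch using Python's standard `wave` module. The one-minute default target and the mono-only check are assumptions, so substitute whatever your platform's documentation actually asks for; `check_clips` is a hypothetical helper name.

```python
import wave


def check_clips(paths: list[str], min_minutes: float = 1.0) -> list[str]:
    """Return a list of problems found in a set of WAV clips (empty list = looks OK).

    The mono requirement and the minimum duration are placeholder assumptions.
    """
    problems = []
    total_seconds = 0.0
    for path in paths:
        with wave.open(path, "rb") as clip:
            # Duration of a clip = number of frames / frames per second.
            total_seconds += clip.getnframes() / clip.getframerate()
            if clip.getnchannels() != 1:
                problems.append(f"{path}: expected mono, found {clip.getnchannels()} channels")
    minutes = total_seconds / 60
    if minutes < min_minutes:
        problems.append(f"only {minutes:.1f} min of audio; target is {min_minutes:g} min")
    return problems
```

Call it with your own recordings, for example `check_clips(["take1.wav", "take2.wav"])` (hypothetical file names), and fix anything it reports before cloning.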

This tech is also shaking up what it means to be a voice actor. If you're curious about how the industry is adapting, check out our guide on the modern AI voice actor and its evolving role.

In the end, whether you're generating a totally new voice or cloning an existing one, the goal is the same: giving you pinpoint control over the final performance. By understanding how an AI learns to turn text into sound, you’re better equipped to direct it and create the exact AI character voices you hear in your head.

Let's get one thing straight: AI voices aren't a single, robotic thing. They're more like a massive, ever-expanding cast of characters. The same tech that gives you a warm, helpful virtual assistant can also create the gravelly growl of a video game boss or the bubbly chirp of an animated squirrel. The sheer range is staggering, and it means there's a perfect AI voice out there for pretty much any creative whim you can dream up.

This isn't just some futuristic fantasy, either. It’s changing how we interact with our world, right now. The big wave started in the 2010s when voice assistants really hit their stride. We got Apple's Siri in 2011, Amazon's Alexa in 2014, and Google Assistant in 2016. Suddenly, machines weren't just taking simple commands; they were learning how we actually talk, weaving AI voices into the daily lives of hundreds of millions. If you're curious about the full timeline, Podcastle.ai has a great write-up on the history of AI voices.

These voices are popping up everywhere, solving some genuinely tricky creative and logistical headaches for storytellers, developers, and brands.

They're making a massive difference in a few key areas:

- Video Games: Picture a sprawling open-world RPG with thousands of lines of dialogue. Instead of hiring an army of actors (and spending a fortune), developers can use AI to give every single non-player character (NPC) a unique voice. This makes the game world feel incredibly rich and alive.
- Animation and Film: During pre-production, creators can use AI voices for "scratch tracks" in animatics. This is a game-changer for nailing the timing, pacing, and emotional rhythm of a scene long before a human actor ever steps into the recording booth.
- Audiobooks and Podcasts: For stories with a huge cast, a single narrator can use different AI character voices to make each person sound distinct. It transforms a standard reading into a much more immersive, full-cast audio drama.
- Accessibility Tools: For people with visual impairments or reading difficulties, high-quality AI voices are a godsend. A warm, natural-sounding voice can make digital content engaging and easy to consume, a world away from the flat, robotic tones of the past.

The real magic of AI voices isn't just that they can talk—it's that they can perform. By tweaking all the little vocal details, you can craft a performance that perfectly captures a character's personality and emotional state.

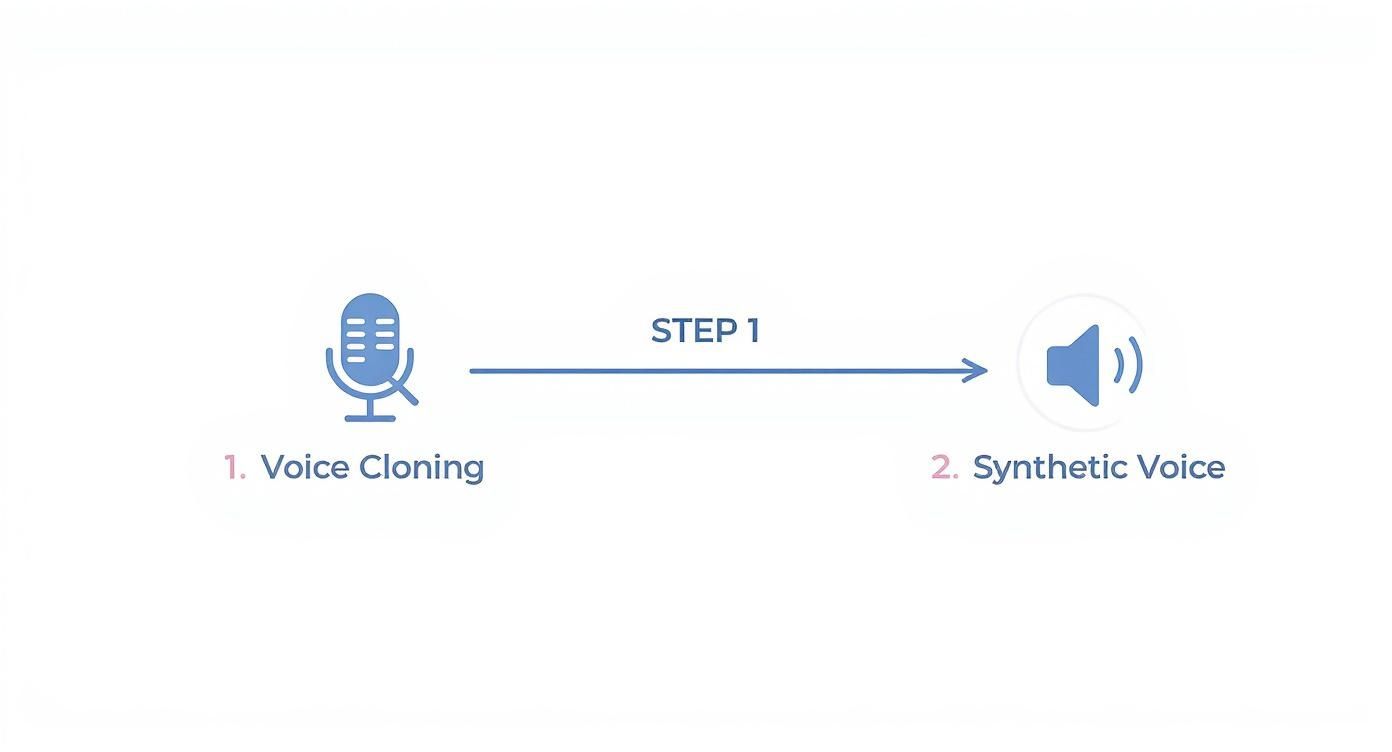

When you decide to create an AI character voice, you're usually heading down one of two main paths: cloning an existing voice or generating a brand-new synthetic one. Each has its own superpowers, so the best choice really depends on what you're trying to accomplish.

| Method | How It Works | Best For | Key Characteristic |
|---|---|---|---|
| Voice Cloning | The AI listens to a short audio sample of a real person's voice and then builds a digital replica of it. | Recreating an existing voice, fixing botched lines in post-production, or keeping a brand's voice consistent across all content. | Authenticity. It nails the unique vocal fingerprint of the original speaker, from their tone to their cadence. |
| Synthetic Voice | The AI generates a totally new, unique voice from scratch based on text prompts and adjustable settings. | Creating one-of-a-kind fictional characters, quickly prototyping different vocal styles, or when you just need a great voice without a specific person in mind. | Flexibility. You have endless creative freedom to design a voice by tweaking attributes like pitch, age, accent, and more. |

Choosing between cloning and synthetic generation is all about your end goal. Do you need to replicate something that already exists, or are you inventing something entirely new?

For a lot of people, the idea of cloning a famous voice is the most exciting part. If you've ever wondered how you could get an AI to sound like your favorite actor, we actually wrote a whole guide on celebrity text-to-speech generators that dives deep into this popular use case.

At the end of the day, the goal is to create a voice that's believable and serves the story. This is about more than just getting the words right; it's about all the subtle cues that make a voice feel truly human. Is your character confident or insecure? Excited or totally bored? Those are the little details that breathe life into a digital performance.

Modern tools give you an incredible amount of control over these nuances. You can often play with settings for things like:

- Assertiveness: The strength and firmness of the voice, ranging from shy to bold.
- Enthusiasm: The level of energy and excitement packed into the delivery.
- Smoothness: The texture of the voice, from a silky, flowing tone to something more choppy and staccato.
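If you script your generations, it helps to keep these sliders in one validated place. The sketch below assumes a 0.0 to 1.0 scale and reuses the three setting names from the list as field names; both choices are hypothetical, since each platform names and scales its controls differently.

```python
from dataclasses import asdict, dataclass


@dataclass
class VoiceStyle:
    """Hypothetical style sliders, each on an assumed 0.0-1.0 scale."""
    assertiveness: float = 0.5  # 0 = shy, 1 = bold
    enthusiasm: float = 0.5     # 0 = low energy, 1 = very excited
    smoothness: float = 0.5     # 0 = choppy and staccato, 1 = silky and flowing

    def __post_init__(self) -> None:
        # Reject out-of-range values early instead of sending them to an API.
        for name, value in asdict(self).items():
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be between 0 and 1, got {value}")


nervous_sidekick = VoiceStyle(assertiveness=0.2, enthusiasm=0.8, smoothness=0.3)
settings = asdict(nervous_sidekick)  # plain dict, easy to save as a preset or serialize
```

Storing a character's settings as a named preset like this makes it easier to reproduce the same performance across sessions.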

By fine-tuning these elements, you're not just making an AI talk. You’re directing a digital actor, guiding them to deliver the absolute perfect performance every single time.

Alright, let's get our hands dirty. This is where the real fun begins—turning all that theory into an actual, audible performance. Creating your first AI character voice isn't about complex coding; it’s all about creative direction. Think of yourself as a casting director, vocal coach, and sound engineer all rolled into one.

The process kicks off with a simple question that has nothing to do with tech: who is your character? Before you touch a single slider or type a single word, you need a crystal-clear vision of their vocal identity. Is their voice high and zippy, or low and gravelly? Do they talk a mile a minute with breathless excitement, or do they speak slowly with deliberate, thoughtful pauses?

Nailing this down first is the bedrock of a believable performance. A little prep work here goes a long, long way in guiding the AI to produce a voice that doesn't just sound good, but sounds right.

Every great voice starts with a great character concept. You need to map out the core traits that will shape their sound. Don't just think about what they say; think about how they would say it. A character’s voice is a direct reflection of their history, personality, and emotional state.

To build this blueprint, start with these key ingredients:

- Age and Gender: Is your character a wise old man, a young, energetic hero, or maybe a non-binary futuristic android? This is your most basic starting point.
- Personality: Are they sarcastic and witty, warm and nurturing, or timid and nervous? This directly influences their tone and intonation. A confident character might have a steady, assertive voice, while an anxious one might sound more hesitant and breathy.
- Background and Accent: Where are they from? A grizzled space pirate from the outer rim is going to sound worlds apart from a regal queen in a fantasy kingdom. Their accent and dialect add a rich layer of authenticity.

Once you have these attributes nailed down, you’ve got a solid foundation. You're not just creating a generic "AI voice"; you're crafting a specific vocal persona. This blueprint will be your North Star for every decision you make from here on out.
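One way to keep that blueprint handy is to store it as data and generate a plain-English voice description from it, which you could paste into a tool that designs voices from a text description. The class and field names below are hypothetical, and the output format is just one reasonable choice.

```python
from dataclasses import dataclass, field


@dataclass
class CharacterVoice:
    """A character's vocal blueprint (hypothetical fields mirroring the list above)."""
    name: str
    age: str                  # e.g. "elderly", "young adult"
    gender: str               # e.g. "male", "female", "non-binary"
    personality: list[str] = field(default_factory=list)
    accent: str = ""          # e.g. "outer-rim drawl"; empty means not specified

    def describe(self) -> str:
        """Build a one-line, plain-English description of the voice."""
        parts = [f"{self.age} {self.gender} voice"]
        if self.personality:
            parts.append("sounds " + ", ".join(self.personality))
        if self.accent:
            parts.append(f"{self.accent} accent")
        return f"{self.name}: " + "; ".join(parts)


pirate = CharacterVoice("Captain Vex", "middle-aged", "male",
                        ["gruff", "sarcastic"], "outer-rim drawl")
print(pirate.describe())
# prints: Captain Vex: middle-aged male voice; sounds gruff, sarcastic; outer-rim drawl accent
```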

With your character concept in hand, it's time to pick your weapon. The world of AI voice generation is jam-packed with options, each with its own strengths. Some platforms are incredible at creating hyper-realistic clones of real voices, while others give you a massive sandbox to design completely synthetic voices from scratch.

To get your creative juices flowing and see what's out there, you can find a solid list of the top AI voiceover tools for marketing videos that work just as well for character creation. When choosing, think about what matters most for your project. Do you want a huge library of pre-made voices, or do you need deep, granular control over every vocal nuance? The right tool makes all the difference.

This infographic breaks down the two main paths you'll encounter when generating AI character voices.

As you can see, whether you're cloning an existing voice for authenticity or synthesizing a new one for total creative freedom, both roads can lead to an incredibly powerful final product.

This is where the magic really happens. Your text prompt is the script, the stage direction, and the emotional guide all in one. How you write your prompt directly coaxes the performance you want out of the AI. Just typing out the dialogue isn't enough; you've got to give the AI context.

The secret to a killer AI vocal performance isn't in the algorithm—it's in the prompt. A well-written prompt is the difference between a flat, robotic reading and a performance that feels genuinely alive.

Let’s look at an example. Say you want the line, "I can't believe you did that."

- Weak Prompt: `I can't believe you did that.`
- Strong Prompt: `(Whispering, shocked) I can't believe you did that.`
- Even Stronger Prompt: `[tone: disappointed, quiet] I can't believe you did that.`

See the difference? The more descriptive you are, the better the AI can interpret the emotion and delivery you're aiming for.
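If you are generating many lines, a small helper keeps your cue formatting consistent. This sketch produces both cue styles from the example prompts; which syntax a given platform actually honors (parentheses, bracketed tags, or neither) is an assumption you should check against its documentation, and `direct` is a hypothetical name.

```python
def direct(line: str, *cues: str, style: str = "paren") -> str:
    """Prefix a line of dialogue with delivery cues in one of two styles.

    style="paren"   -> (Whispering, shocked) line
    style="bracket" -> [tone: disappointed, quiet] line
    """
    if not cues:
        return line  # no direction at all: the "weak prompt"
    joined = ", ".join(cues)
    if style == "paren":
        return f"({joined}) {line}"
    if style == "bracket":
        return f"[tone: {joined}] {line}"
    raise ValueError(f"unknown style: {style!r}")


line = "I can't believe you did that."
print(direct(line, "Whispering", "shocked"))
print(direct(line, "disappointed", "quiet", style="bracket"))
```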

Once you've generated the first take, it's time to put on your director's hat. Listen back critically. Does the pacing feel right? Is the emotional punch on the right word? Most modern platforms give you a whole suite of tools to polish the final output. You can usually tweak things like:

- Pitch: Raise or lower the overall pitch to make a character sound younger, older, or more excited.
- Speed: Slow down the delivery for dramatic flair or speed it up to create a sense of urgency.
- Pauses: Add or trim pauses between words and sentences to nail a more natural, human-like rhythm.
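On engines that accept SSML, those three adjustments map onto standard tags: `<prosody>` with its `pitch` and `rate` attributes for pitch and speed, and `<break>` for pauses. The helper below is a sketch; its name and defaults are assumptions, and support for units such as semitones (`st`) varies between engines, so check yours.

```python
from xml.sax.saxutils import escape


def adjust_delivery(sentences: list[str], pitch_semitones: float = 0.0,
                    rate_percent: int = 100, pause_ms: int = 300) -> str:
    """Wrap sentences in one SSML <prosody> element with a pause between sentences.

    pitch_semitones: relative pitch shift (positive = higher, negative = lower)
    rate_percent:    speaking rate relative to normal (100 = unchanged)
    pause_ms:        silence inserted between consecutive sentences
    """
    pitch = f"{pitch_semitones:+g}st"  # e.g. +2st or -1.5st
    gap = f'<break time="{pause_ms}ms"/>'
    body = gap.join(escape(s) for s in sentences)  # escape &, <, > in the script text
    return f'<speak><prosody pitch="{pitch}" rate="{rate_percent}%">{body}</prosody></speak>'


# A tense, hurried read: slightly higher, 20% faster, short gaps.
print(adjust_delivery(["Run!", "They're right behind us."], pitch_semitones=2,
                      rate_percent=120, pause_ms=150))
```

Keeping the three knobs as parameters makes the generate, listen, and tweak cycle quick: change one number, regenerate, and compare.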

This cycle of generating, listening, and tweaking is absolutely crucial. It might take a few tries to get it just right, but each little adjustment brings you closer to the voice you hear in your head. To get a feel for it yourself, jump in and create your own text-to-speech audio to see how small changes can produce wildly different results.

Alright, let's move beyond the basics. This is where you stop being a button-pusher and start becoming a true vocal director. Creating truly convincing AI character voices isn't just about feeding lines into a machine; it's about conducting a digital performance, guiding every pause, breath, and emotional turn.

Think of your prompt as the director's notes on a script. A simple line like "Get out" could be a whisper of fear, a roar of rage, or a flat, defeated sigh. Without your direction, the AI is just guessing. But with the right cues, you can coax out the exact performance you're hearing in your head.

This is the fun part, where you graduate from simply generating audio to designing a genuine vocal performance that brings your character to life.

Real people are rarely just "happy" or "sad." Their emotions are messy, often shifting mid-sentence. A fantastic vocal performance captures that nuance, like a character starting a line in disbelief and ending it in pure fury.

To pull this off, you need to embed emotional cues right inside the dialogue. It's just like the parenthetical stage directions you'd see in a screenplay.

- (Shocked, then furious) "You actually went there without me?"
- (Nervous, faking confidence) "Oh yeah, I can totally handle that. No problem."
- (Whispering, with a smirk) "Well, that was a brilliant idea."

By bracketing these directions, you're handing the AI a clear emotional roadmap for the character's journey through the sentence. This is the single most important technique for making dialogue feel dynamic and real, not flat and robotic.
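When these cues live in a script file, you may want to pull them apart from the dialogue, for example to check that every line has direction before generating audio. A minimal sketch, assuming each line follows the `(cue, cue) "dialogue"` pattern in the examples; `split_cue` is a hypothetical helper.

```python
import re

# A parenthetical cue list, then the dialogue; quotes around the dialogue are optional.
CUE_LINE = re.compile(r'^\((?P<cues>[^)]*)\)\s*"?(?P<line>.*?)"?$')


def split_cue(entry: str) -> tuple[list[str], str]:
    """Split a directed line into its list of cues and the words to be spoken."""
    text = entry.strip()
    match = CUE_LINE.match(text)
    if match is None:
        return [], text.strip('"')  # no parenthetical direction at all
    cues = [cue.strip() for cue in match.group("cues").split(",") if cue.strip()]
    return cues, match.group("line")


script = [
    '(Shocked, then furious) "You actually went there without me?"',
    '"Fine. Whatever."',
]
for entry in script:
    cues, line = split_cue(entry)
    if not cues:
        print("No direction for:", line)
```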

The real magic in creating AI character voices happens in these tiny moments. A well-placed emotional cue adds a layer of subtext that can make a performance genuinely captivating.

Ready to get your hands dirty with some pro-level tools? Many platforms, including SendFame, support a simple markup language to give you pinpoint control over the delivery. It's often a simplified version of Speech Synthesis Markup Language (SSML), and you can think of it as a secret back-channel to the AI's vocal cords.

Using a few simple tags, you can command specific actions that go way beyond basic emotional notes:

- Pauses: You can insert perfectly timed pauses to build tension or create a more natural, conversational flow. For instance, `<break time="1s"/>` forces a one-second pause right where you want it.
- Emphasis: Want to make sure the AI stresses the right word in a sentence? You can tell it exactly where to put the emphasis, which can completely change the line's meaning.
- Pronunciation: Is the AI stumbling over a weird brand name or a bit of sci-fi jargon? You can spell it out phonetically to guarantee it gets it right every single time.

Learning to sprinkle these tags into your prompts is what separates the amateurs from the pros. It's the final polish that makes your audio sound exactly how you imagined it.
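Here is what those three controls look like as standard SSML 1.1 elements (`<break>`, `<emphasis>`, and `<phoneme>`), built with a few small Python helpers. This is a sketch under assumptions: the helper names are hypothetical, the IPA for the made-up name "Zyrex" is invented for the example, and which of these tags a particular platform's simplified markup accepts is something to confirm in its documentation.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape, quoteattr


def pause(seconds: float) -> str:
    """A timed silence, e.g. pause(1) -> <break time="1s"/>."""
    return f'<break time="{seconds:g}s"/>'


def stress(word: str, level: str = "strong") -> str:
    """Ask the engine to emphasize one word."""
    return f"<emphasis level={quoteattr(level)}>{escape(word)}</emphasis>"


def pronounce(word: str, ipa: str) -> str:
    """Pin down pronunciation with an IPA transcription."""
    return f'<phoneme alphabet="ipa" ph={quoteattr(ipa)}>{escape(word)}</phoneme>'


ssml = (
    "<speak>Meet me at " + pronounce("Zyrex", "ˈzaɪrɛks") + " Station."
    + pause(1) + "Come " + stress("alone") + ".</speak>"
)
ET.fromstring(ssml)  # raises ParseError if the markup is not well-formed XML
print(ssml)
```

Building tags through helpers rather than typing them by hand keeps special characters escaped and the markup well-formed, which is a common source of silent failures in SSML.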

To show you how this all comes together, here are a few "recipes" for common vocal styles. Use these as a jumping-off point and then start experimenting on your own.

- The Conspiratorial Whisper: Combine a low-volume instruction with a "secretive" emotional tone. Prompt: `(whispering, secretive) I hid the package under the third floorboard.`
- The Heroic Roar: Use a "booming" or "triumphant" descriptor and don't be afraid to throw in some exclamation points to crank up the energy. Prompt: `(shouting, triumphant) For the kingdom!`
- The Dripping Sarcasm: This one's tricky and often takes a few tries! Combine a "sarcastic" or "dry" tone with specific emphasis on the words you want to sound insincere. Prompt: `(sarcastic) Wow, that's just a *fantastic* idea. What could possibly go wrong?`
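The asterisks in the sarcasm recipe are a prompt convention, and not every engine will read them as emphasis. If yours accepts SSML instead, a small converter can turn `*word*` markers into `<emphasis>` tags. A hedged sketch; the helper name and the choice of `level="strong"` are assumptions.

```python
import re
from xml.sax.saxutils import escape


def stars_to_emphasis(text: str) -> str:
    """Turn *word* markers into SSML <emphasis> tags, escaping everything else."""
    # re.split with a capture group returns [plain, starred, plain, starred, ...].
    parts = re.split(r"\*([^*]+)\*", text)
    out = []
    for i, part in enumerate(parts):
        if i % 2:  # odd indexes hold the captured, starred words
            out.append(f'<emphasis level="strong">{escape(part)}</emphasis>')
        else:
            out.append(escape(part))
    return "".join(out)


print(stars_to_emphasis("Wow, that's just a *fantastic* idea. What could possibly go wrong?"))
```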

Jumping into the world of AI character voices can feel a bit like stepping into the future, and it's totally normal to have a few questions. From the legal tripwires to the practical "how much does this really cost?" stuff, let's tackle the big ones.

Can you clone any voice you want? Whoa there. That's a hard no. This is probably the most important question, and the answer is crystal clear: cloning someone's voice without their explicit, written permission is a massive legal and ethical disaster waiting to happen.

Think of a voice like a fingerprint—it's a unique part of someone's identity. Using it without their consent isn't just rude, it can land you in some seriously hot water. Always, always make sure you have the proper rights and signed agreements before you even think about cloning a specific person's voice.

The price for AI voices is all over the map. You can find plenty of platforms offering free trials or a handful of credits to let you dip your toes in and play around with a few lines of dialogue. It's a great way to experiment without commitment.

For bigger projects, you'll usually run into subscription plans or pay-as-you-go models. The final cost often boils down to the voice quality you need and the sheer volume of audio you're generating. But even then, it's almost always a fraction of the cost of hiring, scheduling, and recording human actors, especially when you have a mountain of dialogue.

It's not just about saving a few bucks upfront. The real win is the speed and creative freedom you get. Need to tweak a line? No need to book another studio session—just type and click.
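Because cost usually scales with how much audio you generate, a rough budget starts from your script's length. This sketch assumes a speaking rate of about 150 words per minute (a common rule of thumb for narration; real delivery varies) and a per-minute price you fill in from your platform's pricing page. The price used below is a hypothetical placeholder, and some platforms bill by characters rather than minutes, in which case count `len(script)` instead.

```python
def estimate_audio(script: str, price_per_minute: float,
                   words_per_minute: float = 150.0) -> tuple[float, float]:
    """Return (estimated minutes of audio, estimated cost) for a script.

    price_per_minute is a placeholder: look up your platform's actual rate.
    """
    minutes = len(script.split()) / words_per_minute
    return minutes, minutes * price_per_minute


dialogue = "Halt! Who goes there? " * 100  # 400 words of placeholder dialogue
minutes, cost = estimate_audio(dialogue, price_per_minute=0.10)  # hypothetical price
print(f"about {minutes:.1f} min of audio, roughly ${cost:.2f}")
```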

Whether you're a solo indie dev trying to make a dream project come to life or a big studio looking for efficiency, getting these basics down helps you make smart, responsible choices.

Ready to give your characters a voice they deserve? With SendFame, you can design a totally unique AI performance in seconds. Start creating for free on SendFame.
